Beware False Negatives

Automated accessibility testing tools are rightly wary of giving false positives. You don’t want to flag something as an error in a test only to have the team rebuild an entire thing for no reason (never mind the risk of introducing errors).

We know automated accessibility testing tools can really only capture about 30% of issues. That number is, of course, highly variable based on factors specific to the thing being reviewed. As more complex widgets are built that lean on novel ways of twisting HTML and CSS, it can be harder to validate them with a tool. Especially through all their states.

But what happens when the tools themselves miss things they should catch? Developers who build a broken thing but lack the necessary testing or even standards experience might rely on automated tools and produce problematic content as a result.

This is not new, of course.

Straight-up HTML Violations

Take the following construct:

<button> <h2>A <code>&lt;button&gt;</code> Holding a Heading</h2> </button>

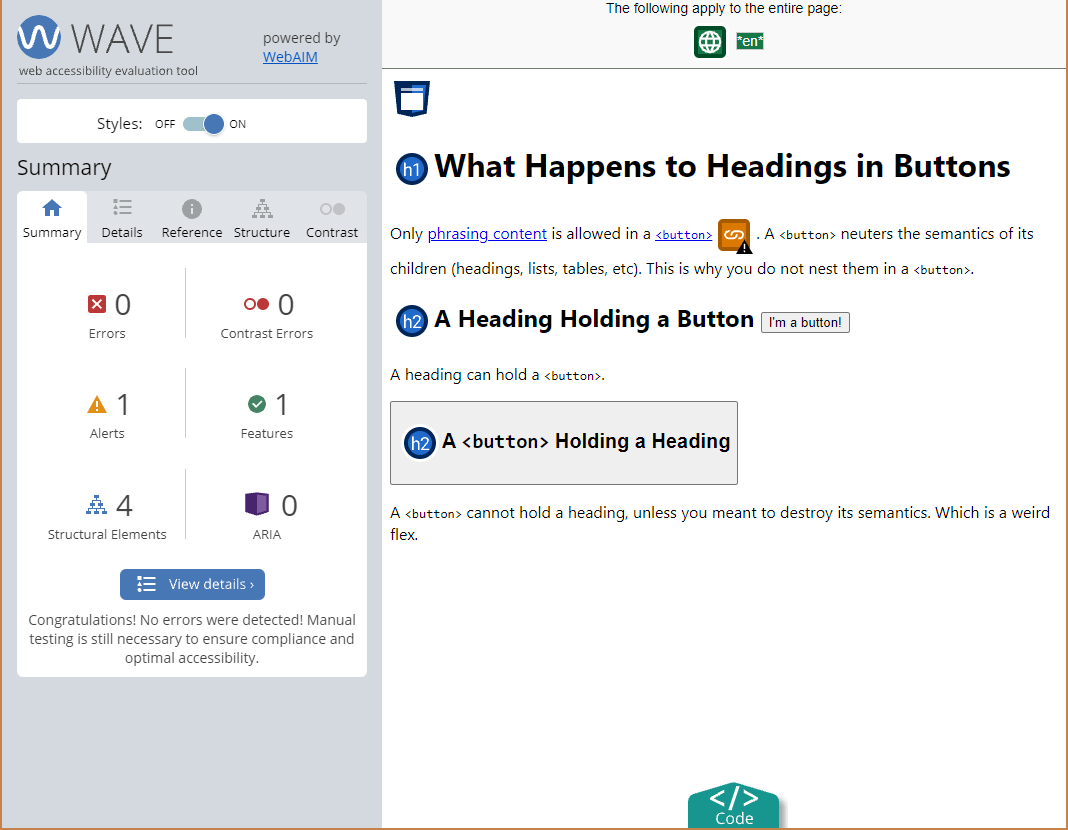

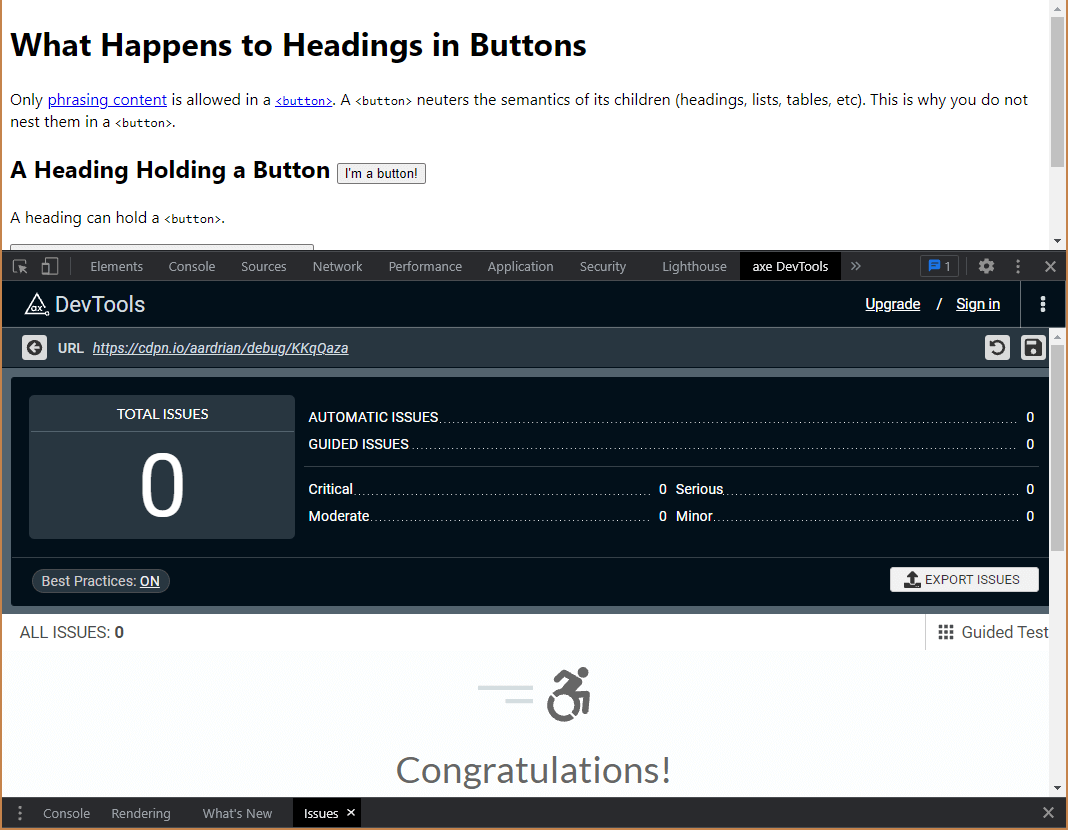

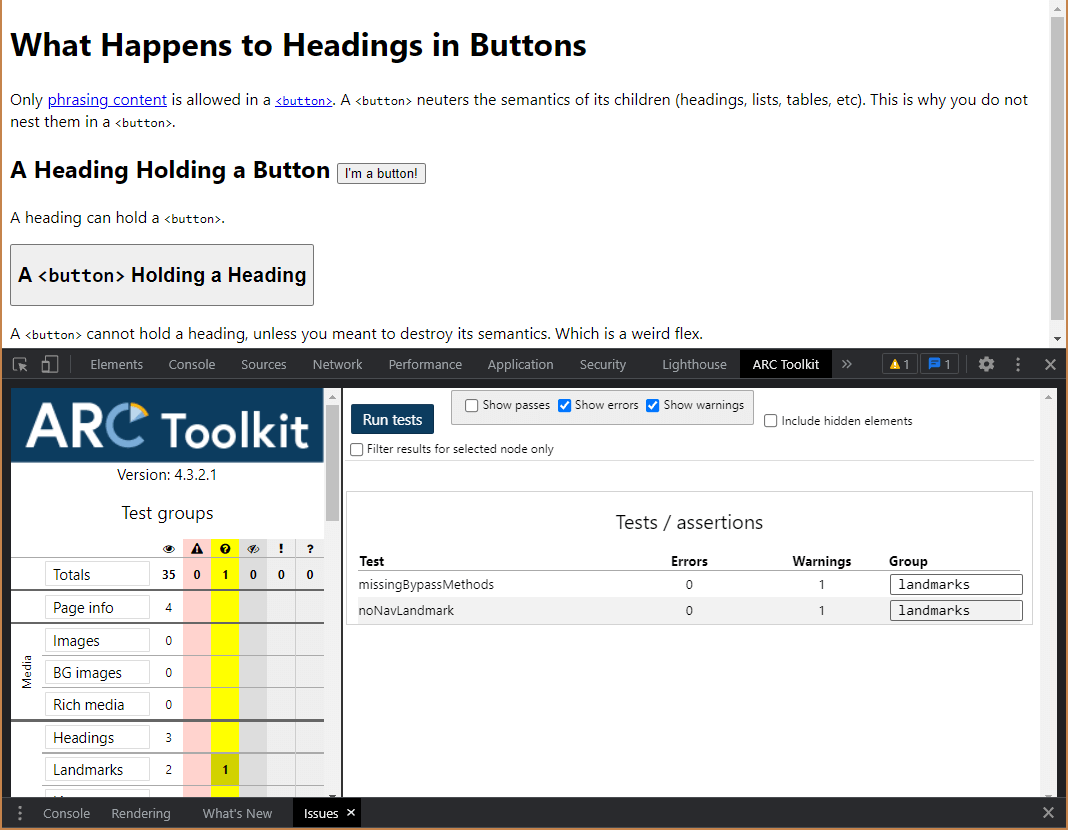

This will not trigger a warning from the major accessibility testing tools — WAVE, Axe, and ARC. If you don’t believe me, I made a demo, which you can also load directly in debug mode and test yourself.

See the Pen What Happens to Headings in Buttons by Adrian Roselli (@aardrian) on CodePen.

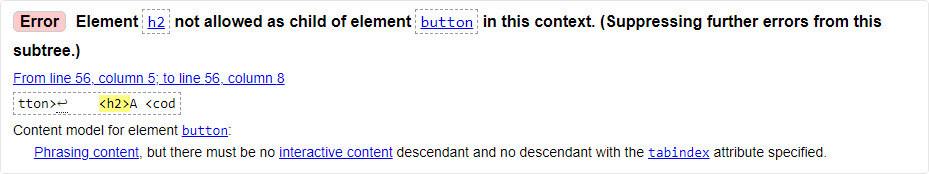

This code will, however, generate an error in the HTML validator. Ideally a code linter would catch it as well. And it rightly should, because this is not only invalid HTML, but it is a 4.1.1 Parsing error under WCAG.

And not only that, it doesn’t act as a heading in Safari with VoiceOver on iOS 14, iOS 15, or macOS 11.4; nor in Chrome with TalkBack on Android 11; nor in Chrome with JAWS 2020 or 2021. NVDA 2021 with Firefox announces the heading, as does Narrator with Edge, both papering over the developer’s mistake. Going off the WebAIM 2019 screen reader survey, that means 70% of users will encounter a broken pattern.

While accessibility practitioners generally try to avoid 4.1.1 failures for stuff that has no impact on users, this has an impact on users.

A developer knowledgeable in HTML could catch this at a glance, and probably would know to never use this construct even without having to confirm its brokenness across a range of assistive technology. An accessibility practitioner should be able to take it further and test it in the common platforms.
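As a rough illustration of how mechanically detectable this nesting is, here is a minimal Python sketch that flags any heading element opened inside a `<button>`. It is not how WAVE, Axe, ARC, or the HTML validator work; the class and function names are hypothetical, and the standard library's `html.parser` is a tokenizer rather than a conforming HTML parser, so treat the output as a hint and not a verdict.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
# Void elements never get a closing tag, so they are kept off the stack.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class HeadingInButtonChecker(HTMLParser):
    """Hypothetical checker: warns for each heading opened inside a <button>."""

    def __init__(self):
        super().__init__()
        self.open_tags = []  # names of the elements that are currently open
        self.warnings = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS and "button" in self.open_tags:
            line, _ = self.getpos()
            self.warnings.append(f"line {line}: <{tag}> nested inside <button>")
        if tag not in VOID:
            self.open_tags.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; ignore stray end tags.
        if tag in self.open_tags:
            while self.open_tags.pop() != tag:
                pass


def find_headings_in_buttons(markup):
    """Return a list of warnings for headings nested inside buttons."""
    checker = HeadingInButtonChecker()
    checker.feed(markup)
    checker.close()
    return checker.warnings


if __name__ == "__main__":
    sample = "<button> <h2>A Button Holding a Heading</h2> </button>"
    for warning in find_headings_in_buttons(sample):
        print(warning)
```

A check like this only says the construct is present. Whether it breaks for users still has to be confirmed with real browser and screen reader combinations.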

Abusing Roles and Not Knowing It

Take the following construct:

<custom-element role="heading" aria-level="2">
  <div role="group" aria-label="Expanded region">
    <custom-label role="heading" aria-level="3">
      A Fake Level 3 Heading
    </custom-label>
  </div>
</custom-element>

It is not uncommon to see ARIA constructs used to get around linting and validation errors. The HTML validators do not check ARIA (or all of ARIA), so if your goal is to avoid HTML validation errors then ARIA is a great way to game the system. Try it yourself with this debug version of the following demo.

See the Pen by Adrian Roselli (@aardrian) on CodePen.

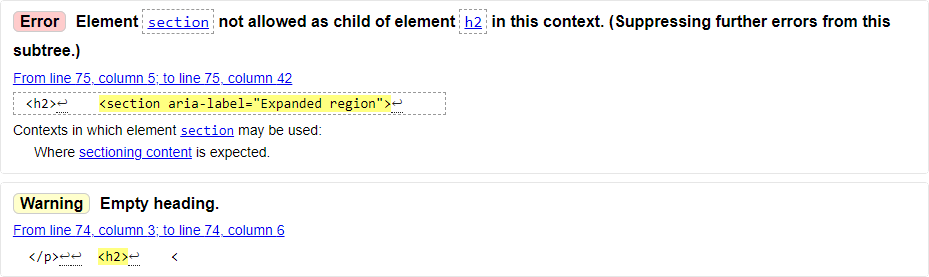

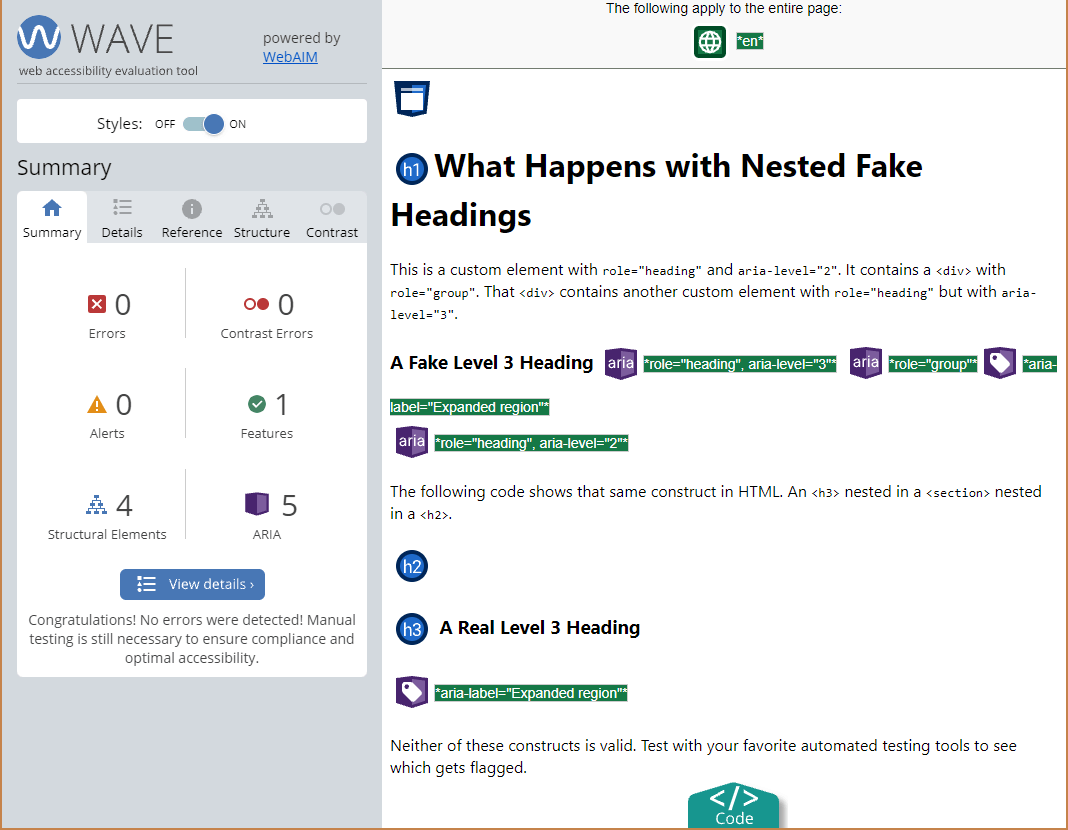

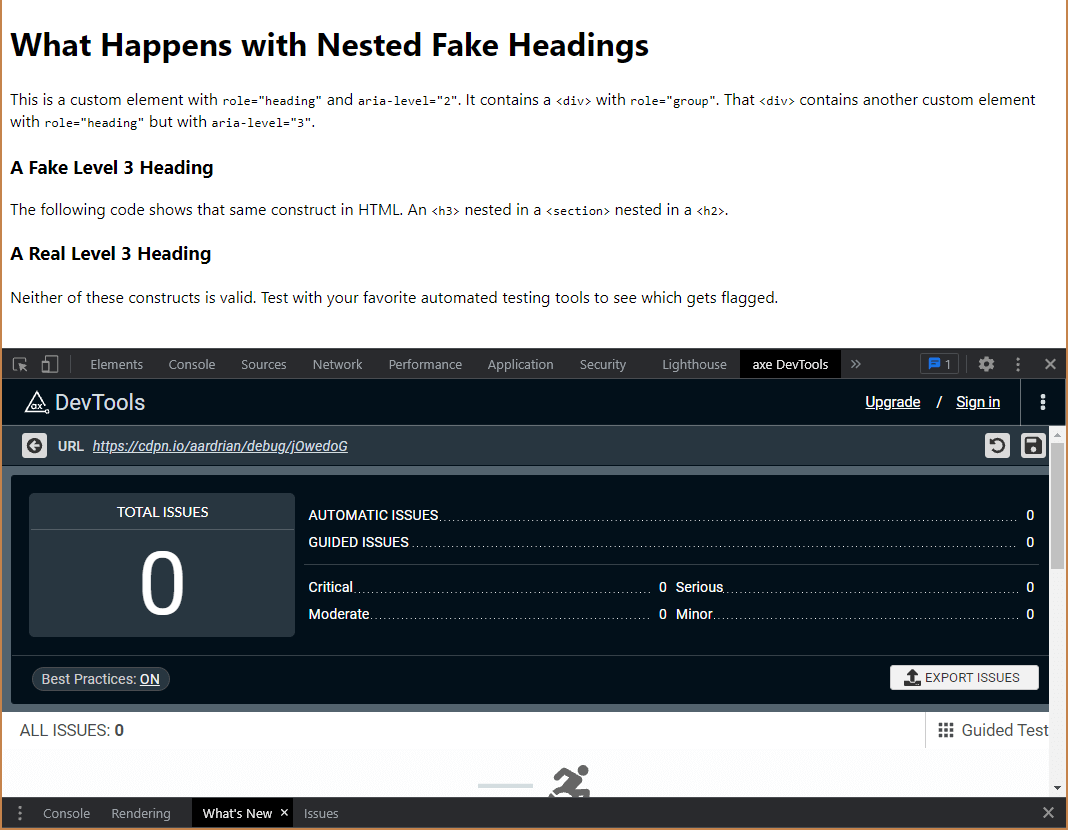

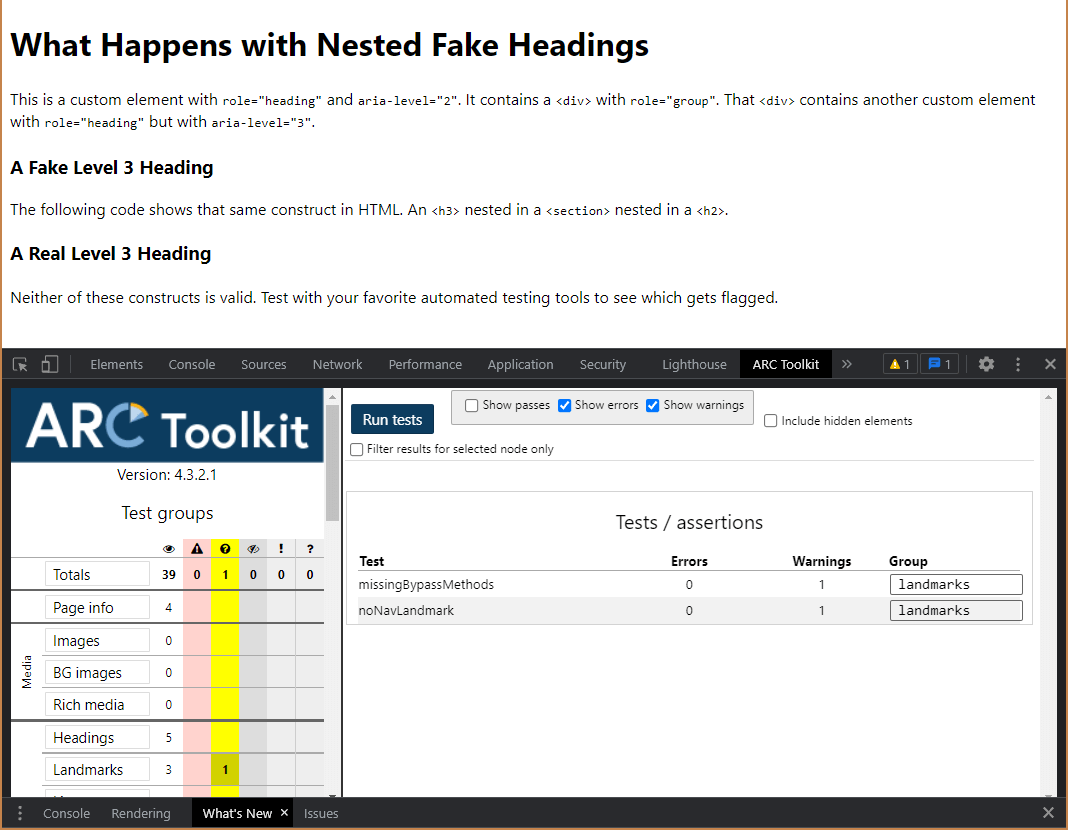

As predicted, the HTML validator fails to flag the invalid ARIA construct. It does, however, note the broken HTML equivalent.

Suppressing further errors from this subtree.

Remove that intermediate <section> and you will get an error that a heading cannot contain a heading.

Take a moment to guess how the major accessibility testing tools (WAVE, Axe, and ARC) perform. After all, there is invalid ARIA nesting happening as well as a broken HTML structure on the page that we know the HTML validator catches.

These examples are 4.1.1 Parsing errors. While it is unclear if the author wanted a heading level 3, level 2, a group, or some other combination, it is clear that a screen reader is left to apply its own rules to try to make sense of it.
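The nested-heading problem can be checked without caring whether the heading comes from an `<h2>` or from `role="heading"`. The following Python sketch is an assumption-laden illustration, not a description of any existing tool: the names are hypothetical, only the first token of a `role` attribute is considered, and `html.parser` does not build a real DOM or accessibility tree.

```python
from html.parser import HTMLParser

NATIVE_HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
# Void elements never get a closing tag, so they are kept off the stack.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


def heading_label(tag, attrs):
    """Describe an element that claims to be a heading, or return None."""
    attributes = dict(attrs)
    # Simplification: only the first role token is looked at.
    role = (attributes.get("role") or "").lower().split()[:1]
    if role == ["heading"]:
        level = attributes.get("aria-level") or "unspecified"
        return f'<{tag} role="heading"> (aria-level {level})'
    if not role and tag in NATIVE_HEADINGS:
        return f"<{tag}>"
    return None


class NestedHeadingChecker(HTMLParser):
    """Hypothetical checker: warns when a heading sits inside another heading."""

    def __init__(self):
        super().__init__()
        self.stack = []  # (tag, heading label or None) for each open element
        self.warnings = []

    def handle_starttag(self, tag, attrs):
        label = heading_label(tag, attrs)
        if label:
            ancestors = [outer for _, outer in self.stack if outer]
            if ancestors:
                self.warnings.append(f"{label} is nested inside {ancestors[-1]}")
        if tag not in VOID:
            self.stack.append((tag, label))

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; ignore stray end tags.
        if any(open_tag == tag for open_tag, _ in self.stack):
            while self.stack.pop()[0] != tag:
                pass


def find_nested_headings(markup):
    """Return a list of warnings for headings nested inside headings."""
    checker = NestedHeadingChecker()
    checker.feed(markup)
    checker.close()
    return checker.warnings
```

Fed the ARIA construct from this section, the sketch reports the level 3 heading inside the level 2 heading even though the `role="group"` wrapper sits between them. The same function flags the `<h2>` / `<section>` / `<h3>` equivalent.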

A related example is when a well-known name in the web dev industry shared a broken drop-cap approach that leaned on a non-standard role (only recognized by VoiceOver) and misused ARIA accessible name techniques. Someone who knew ARIA or tested on more assistive technologies than their dev machine’s default browser would have caught this.

But people trusted this person and integrated it into their work. And when this person revised the code (basing it on mine) without acknowledging why they made the change, it was not just de facto gaslighting; it also failed to signal to those who had used the broken code that they should maybe go update it.

Abusing Roles and Knowing It

Some of these developer mistakes may be genuine errors from misunderstanding, lack of time to test, lack of understanding of the impact they can have on users, or even just laziness.

But then there is Facebook.

If you have not read the latest example of abusive behavior of this company, then read Facebook Rolls Out News Feed Change That Blocks Watchdogs from Gathering Data and see how Facebook capitalizes on affordances for disabled users to game the system.

The changes, which attach junk code to HTML features meant to improve accessibility for visually impaired users, also impact browser-based ad blocking services on the platform. The new code risks damaging the user experience for people who are visually impaired, a group that has struggled to use the platform in the past.

The updates add superfluous text to news feed posts in the form of ARIA tags, an element of HTML code that is not rendered visually by a standard web browser but is used by screen reader software to map the structure and read aloud the contents of a page. Such code is also used by organizations like NYU’s Ad Observatory to identify sponsored posts on the platform and weed them out for further scrutiny.

Generally I do not hold it against individuals who work for a demonstrably amoral company, especially when they are trying to improve accessibility for those few workers and users who have little choice. In this case, however, I rather hope anyone who works in accessibility at Facebook strongly considers leaving before their skills are used to further harm users, especially disabled users. It seems counter to the reason one gets into accessibility work.

Contrary to the title of this post, Facebook is not a false negative. Facebook is a net negative. Block it at the router. Or the hosts file.

Wrap-up

We all make honest mistakes, and sometimes rely too much on our tools to catch them. But they won’t. This doesn’t mean the tools are worthless, but the people behind those tools may have challenges ranging from strong (questionable) opinions to genuine technical difficulties addressing some of this. If it was easy, accessibility overlays might actually be useful instead of harmful.

Your options are straightforward — learn HTML well, understand the impact of ARIA, and test with more assistive technology than is on your phone. Then pass that knowledge to your team.

Alternatively, if you are intentionally trying to game the system to pass code checks or something as simple as skirt watchdogs in a brazen attempt to subvert democracy while knowingly harming your disabled audience, then you can get into the sea.

Related

- WCAG 2.1 parsing error bookmarklet (2012 and 2019 by Steve Faulkner)

- The HTML Star Is Ignored (and Shouldn’t Be) (2014)

- Uncanny A11y (2019)

- Building the most inaccessible site possible with a perfect Lighthouse score (2019 by Manuel Matuzović)

- Speech Viewer Logs of Lies (2020)

Update: 26 September 2021

Hidde asked a good question about automatically making certain roles focusable, a narrowing of the request to automatically fix detectable accessibility issues:

Accessibility friends… what if browsers would take role=button/role=link as a hint to add an element into tab order and allow activation with keyboard? What would be pros and cons?

Steve Faulkner asked this exact question in August in his post Why not? and got some thoughtful replies.

As this post outlines, developers already mis-use roles, including interactive roles. Some do not understand broken nesting, some choose to do so to bypass linters, and some (Facebook) intentionally mis-use ARIA.

Scott lays out considerations in a response thread:

doing this would also take away legitimate use cases where controls need to be programmatically exposed to AT, but DO NOT need to be in the focus order (and shouldn't need a tabindex=-1) because there are alternative focusable elements to perform the same function

And drops this nugget, which I embed after the tweet:

For example, here's just a QUICK demo of where things 'look' relatively fine with invalid markup, but there can be minor to major impacts for assistive technologies

codepen.io/scottohara/pen/oNwQLNr

twitter.com/scottohara/status/1442134…

Because browsers are papering over poor mark-up, and accessibility testing tools fail to flag it, screen reader users in particular are harmed by the level of correction browsers already provide.

See the Pen invalid markup. what could possibly go wrong? by Scott (@scottohara) on CodePen.

And Pat points out why even relying on what browsers have in place today can lead to “best viewed in” messages on sites.

the problem was that without a documented error correction algorithm, browsers all applied their own mysterious heuristics to fixing borked markup. and unless a developer then tested in all browers (rather than just Chrome or whatever) that led to a myriad of bugs for real users

When developers or even spec writers say WCAG Success Criterion 4.1.1 Parsing (A) is no longer a thing to worry about, they are simply wrong. Especially when self-described accessibility experts promote patterns without testing, without understanding, and without the necessary skill.

Update: 3 October 2021

Since I wrote this post, the ARIA in HTML specification has moved to Proposed Recommendation. Unlike some W3C documents, such as Notes, which exist only as informational documents, ARIA in HTML is clear in its purpose:

[…] This specification’s primary objective is to define requirements for use with conformance checking tools used by authors (i.e., web developers). These requirements will aid authors in their development of web content, including custom interfaces/widgets, that makes use of ARIA to complement or extend the features of the host language [HTML].

Until conformance checkers (such as the ones I cited in this post) implement testing for invalid nesting as in my examples, I encourage all developers to read section 4, Allowed descendants of ARIA roles. It is arguably easier to read than the ARIA spec itself. For the scope of the examples in this post, the table shows that headings should not be in buttons, and headings cannot contain other headings.

Read Scott O’Hara’s (one of the spec authors) description in his post ARIA in HTML.

8 Comments

What you may want to consider is that a significant number of WCAG testers don’t consider “HTML must be valid” to be part of SC 4.1.1.

If tools were to do as you suggest, and flag all invalid HTML as accessibility issues, there would be an awful lot of accessibility issues that don’t affect people with disabilities at all. Fixing validation issues “just because” is hardly a good use of time.

What you’re arguing for, tools are doing, but they’re doing it selectively. Most of these tools fail invalid ARIA attributes, invalid role values, nesting interactive elements, improper parent/child relationships in tables, lists, menus, etc. Things that actually affect humans.

Historically, this wasn’t the reason SC 4.1.1 was created. This was done because the HTML spec didn’t say how to handle parser errors. That was resolved in HTML 5, which is why many of us feel SC 4.1.1 is obsolete. Standardised accessibility trees, and not having undefined situations in HTML.

In response to the comment above:

What you may want to consider is that a significant number of WCAG testers don’t consider “HTML must be valid” to be part of SC 4.1.1.

That is evident from the examples I cited.

If tools were to do as you suggest, and flag all invalid HTML as accessibility issues […]

That is not what I suggested. Instead I noted that tools don’t catch validation errors, so folks need to test with AT.

What you’re arguing for, tools are doing, but they’re doing it selectively. […] Things that actually affect humans.

Agreed on the first part (tools are being selective), but disagree on the second part (things that affect humans). I point you to the heading nested in the button example with which I open this post. The tool could have flagged that invalid construct, which demonstrably affects humans, but selectively chose not to.

[…] many of us feel SC 4.1.1 is obsolete. Standardised accessibility trees, and not having undefined situations in HTML.

I understand the background of 4.1.1 and reasoning for removing it. While the example I cite could be arguably flagged under other SCs, it isn’t. This issue could have been flagged if testing tools weren’t so selective about when to validate the HTML. As a result this pattern that affects humans has made it into the wild.

In response to the comment above:

Thanks for the response Adrian. The button > heading is a pretty interesting example. The ARIA standards says it isn’t valid, yet despite that all major browser with the exception of Safari will handle this markup just fine. So should tools fail things if they don’t work in 1 browser?

I don’t personally think so. Or rather, I think it depends a little on who uses the tools. For a dev actively building that component, yes it would be good to tell them. But should a tool used as part of an accessibility audit that only includes testing with Chrome+NVDA also by default report Safari-only issues? Should government agencies that use tools to find out if an organization may be breaking the law, shortlist a site who’s only problem is that it has sub-optimal heading markup for Safari?

You’re absolutely right that a11y test tool users shouldn’t assume those tools will find everything there is to find. Hopefully, that’s obvious enough. But I feel it would have been worth asking tool vendors why they don’t do as you suggest. It isn’t because they didn’t think of it, or that they don’t understand the standards.

In response to the comment above:

The button > heading is a pretty interesting example. The ARIA standards says it isn’t valid,…

The construct in the example uses no ARIA. It is invalid per the HTML specification.

…yet despite that all major browser with the exception of Safari will handle this markup just fine.

But it’s not the browsers, at least not completely. Visually they seem to do fine. But programmatically, because it is invalid HTML, they each hand off different information to screen readers, and those screen readers expose that information in different ways.

Which means all the major screen readers (JAWS, TalkBack, VoiceOver), with the exception of NVDA and Narrator, do not expose the heading. That is (roughly) two-thirds of screen readers.

And definitely more than just Safari.

So should tools fail things if they don’t work in 1 browser?

No, that would be silly. They should fail things that are invalid constructs. Like the example.

But should a tool used as part of an accessibility audit that only includes testing with Chrome+NVDA also by default report Safari-only issues?

Of course not. But this post is not about a hypothetical Safari bug. It is about invalid code and tools’ failure to flag invalid code.

Should government agencies that use tools to find out if an organization may be breaking the law, shortlist a site who’s only problem is that it has sub-optimal heading markup for Safari?

Nope. But this is not sub-optimal heading mark-up. It is invalid HTML. It is a bug in the code. A testable bug.

But I feel it would have been worth asking tool vendors why they don’t do as you suggest.

Yes, I could have waded into all the repos (that are open) or sent out emails and waited for responses. But this is an issue now, and folks need to be aware now. Especially as articles with bad advice get circulated and corrections appear a week after that sharing frenzy.

It isn’t because they didn’t think of it, or that they don’t understand the standards.

Agreed, and I think when you said that “they’re doing it selectively” you hit the nail on the head. Because of that selectiveness, I am cautioning testers that relying on those tools exclusively will let testable, human-affecting bugs slide past.

A few thoughts, primarily because you suggest deficiencies in a testing tool I administer. Our approach with WAVE is to primarily identify things that impact end users.

For your first example, I believe the proper semantics are provided via accessibility APIs in every major browser I tested. If a screen reader is not properly presenting the heading in your first example, it is because it is applying flawed internal heuristics and not actually reading or applying the accessibility API data from the browser. Which is more reasonable, that we ask every author on the planet to avoid such patterns, or that bugs be filed against the flawed assistive technologies to have this fixed?

I’m not at all opposed to proper HTML validation, but, like Wilco, I’m not sure it belongs in accessibility guidelines and, thus, legal requirements. In recent years I’ve seen only a handful of instances where HTML validation issues have impacted accessibility AND have not been covered by some other WCAG success criteria. I think all or most of your examples result in either 1.3.1, 2.1.1, or 4.1.2 failures.

On the other hand, I have witnessed significant development efforts spent on addressing many millions of HTML validation issues (and thus WCAG “failures”) that have absolutely no impact on end user accessibility. Why? Because WCAG requires it, because someone took a hardline approach to code validation over actual accessibility, because some tool flagged it as an “error”, and/or because people are being sued for the most minor of WCAG violations even when there is no end user impact.

If accessibility testing tools were to “fail things that are invalid constructs”, the vast majority of which have no accessibility impact (and nearly all of the exceptions are due to AT or browser bugs), we’d be further shifting effort away from things that actually improve end user accessibility.

I do agree that ARIA code validation in testing tools needs to be improved. We’re working on this, but I can assure you it is a complex and heavy lift due to the complexities and vagaries of the specifications and disparities in how browsers and screen readers implement both valid and invalid code. As above, our focus with WAVE will always be on identifying things that impact users, and a large portion of ARIA and HTML validation errors simply don’t.

In response to the comment above:

I appreciate the feedback, and I think it is warranted even though my intent was not to suggest the tools are failing. As Wilco rightly noted, these are difficult to test and tools have to prioritize more impactful warnings.

For your first example, I believe the proper semantics are provided via accessibility APIs in every major browser I tested. If a screen reader is not properly presenting the heading in your first example, it is because it is applying flawed internal heuristics and not actually reading or applying the accessibility API data from the browser.

You can assert the “proper” semantics because you understand the dev’s intent. Devs write other broken patterns with no intent. Browsers may expose those unintended nodes. This is why SRs apply heuristics (good and bad).

Which is more reasonable, that we ask every author on the planet to avoid such patterns, or that bugs be filed against the flawed assistive technologies to have this fixed?

I don’t think this is either/or. Every author should write valid HTML. Every AT should not have bugs. Neither of those will happen, so I am asking authors to test more.

I agree on only identifying things that affect users. This example affects users because it did not function as the dev intended. This is not a fault of the tools.

You quoted my statement, “fail things that are invalid constructs,” from a response above. To tweak it, tools should flag things that are invalid constructs. Let the reviewer make the decision, testing with humans and appropriate browser/AT combos.

As I say in the post, we (though not all) try to avoid 4.1.1 failures for stuff that has no impact on users. Yet I also agree too many testers file as many WCAG failures as possible. In my experience, frivolous 4.1.1 issues can be dismissed after demonstrating their lack of impact. I am more concerned when they fail web site navigation for not using a menu role.

The 4.1.1 WCAG GitHub discussion that I linked ended with what I thought is the right course of action from Pat:

IF 4.1.1 is to be retained, the understanding document should really clarify the situation a lot more.

Fixing the Understanding document was outside the scope of this post, but I agree that it needs to be constrained to demonstrably user-impacting issues.

In response to the comment above:

We’re in agreement that focusing on impactful issues is of most importance, but defining reliable and accurate test conditions that identify what this means becomes rather difficult.

Is it the role of testing tools to be the arbiters of what is “impactful” or not? That’s what guidelines are intended to do. I do, however, acknowledge that we’ve decided to NOT flag all 4.1.1 errors because the vast majority are either not at all impactful or are errors flagged under other SCs.

I’m not really interested in going down the never-ending rabbit hole of monitoring browser and screen reader bugs and varying screen reader heuristics, then making generally arbitrary judgement call as to whether those fringe cases that might impact users on some platforms justify a tool error or flag. Even the examples you shared don’t cause any issues on some platforms. The alternative seems to be to flag a much broader set of validation errors that would divert attention to making tools happy rather than users.

Despite the complexities of all of this, I appreciate these conversations and ideas for possible solutions. I’ll think some more on this and bring some ideas to our team for consideration.

In response to the comment above:

We’re in agreement that focusing on impactful issues is of most importance, but defining reliable and accurate test conditions that identify what this means becomes rather difficult.

We are in agreement on both those points. No “but” needed.

I’m not really interested in going down the never-ending rabbit hole of monitoring browser and screen reader bugs and varying screen reader heuristics…

We are in agreement again.

The alternative seems to be to flag a much broader set of validation errors that would divert attention to making tools happy rather than users.

An alternative could be for devs to use a linter. And/or learn HTML. And/or do AT testing.

For testing tools, the alternative could be to list validation errors (or some sub-set) as warnings.

Despite the complexities of all of this, I appreciate these conversations and ideas for possible solutions.

I appreciate the feedback. The reason I have a comments feature is for peer review, for people to correct me when I am wrong, and to mitigate my one-sided arguments.
