AI-Generated Images from AI-Generated Alt Text

Dear sighted reader, I want you to read this post without looking at the images. Each has been hidden in a disclosure.

Instead, read the alternative text I provide and visualize how it may look. Then read the automatically generated alternative text and try to visualize that. Consider how they differ.

I took the original alt text and the most descriptive auto-generated alt text and fed each into Craiyon and Midjourney, two self-proclaimed AI tools for generating images from nothing more than a text prompt.

Start comparing the images. As you visually compare each, think about how a screen reader user might have benefited from the more descriptive alt text.

Obviously context matters for what alt text you choose, and the alt text might change for an image as it is used in other contexts.

Flat Color Illustration

A flat color illustration

This is the alternative text for the image:

A cartoonish Kawaii slice of toast with happy eyes, open smiling mouth, and reddish cheeks; there is a spatter of blood coming from the top of the toast similar to the Watchmen logo.

I used these tools to automatically generate the following alternative text:

- Microsoft Office (did not offer me an option for an SVG, so I made a PNG)

Icon

- Microsoft Edge

Appears to be icon, appears to say one

- Google Chrome

No description available

- Apple iOS VoiceOver Recognition

(for the SVG) An illustration of a person’s face on a white surface.

(for the PNG) An illustration of a heart with a face.

I chose the most descriptive auto-generated alternative text and fed it into two image generation tools along with my original alternative text. You can compare and contrast the differences:

My alt text fed into Craiyon for the flat color illustration

My alt text fed into Midjourney for the flat color illustration

Apple’s alt text fed into Craiyon for the flat color illustration

An illustration of a heart with a face.

Unlike the effort with the toast, Craiyon went for a happier default expression.

Apple’s alt text fed into Midjourney for the flat color illustration

An illustration of a heart with a face.

Midjourney certainly has a vibe.

Photo

A photograph

This is the alternative text for the image (venue location provided in original surrounding context):

Looking across the atrium with four levels of the gallery space / ramp visible. The ramp contains exhibits as well as crowds of people moving among them, with some people leaning over the edge of the ramp wall.

I used these tools to automatically generate the following alternative text:

- Microsoft Office (took two passes)

A group of people in a large room

- Microsoft Edge

Appears to be a group of people in a large room

- Google Chrome

Appears to be: art gallery which includes interior views as well as a large group of people,

(when I arrowed down, then I heard) Solomon R. Guggenheim Museum, The Metropolitan Museum of Art

- Apple iOS VoiceOver Recognition

A group of people walking on a white staircase.

I chose the most descriptive auto-generated alternative text and fed it into two image generation tools along with my original alternative text. You can compare and contrast the differences:

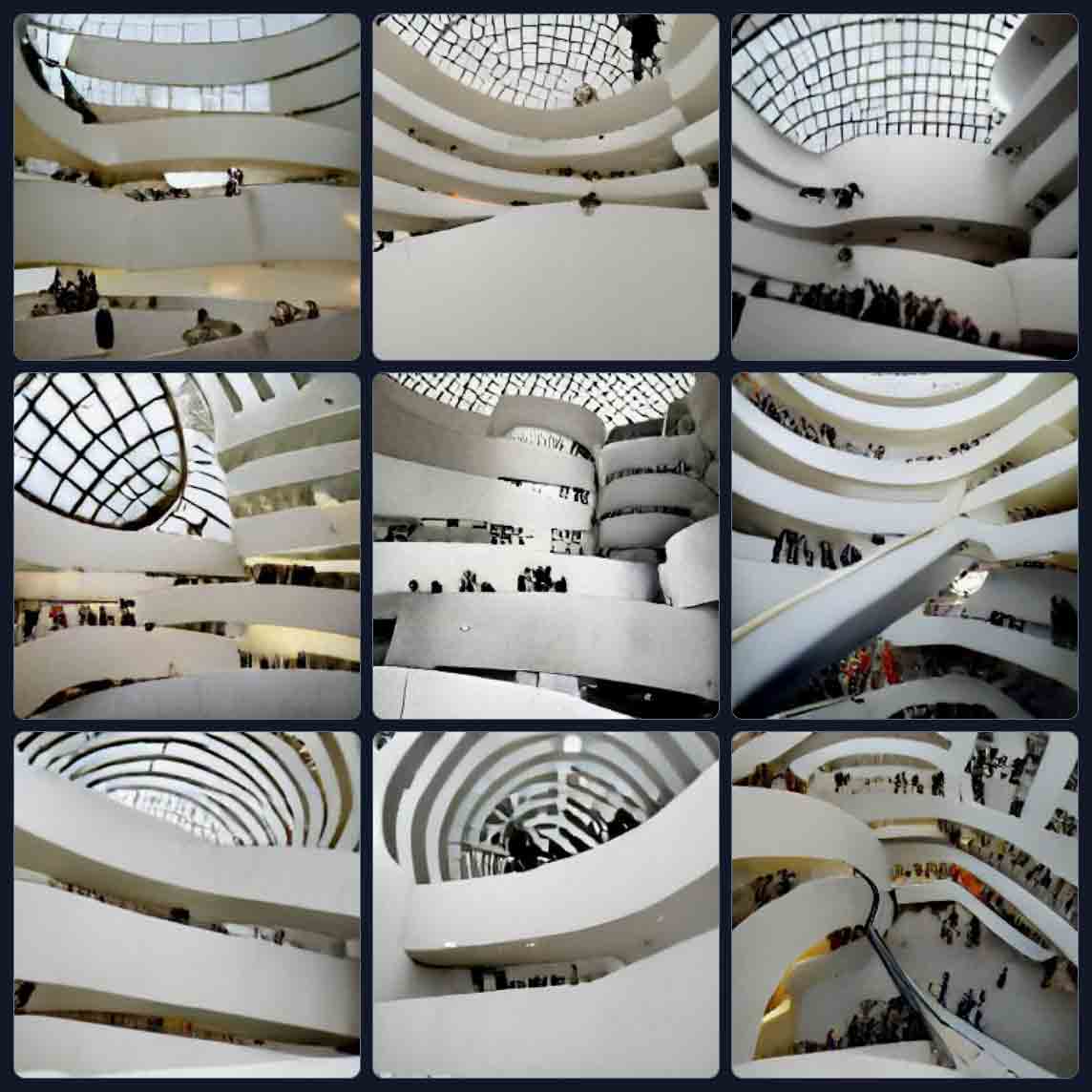

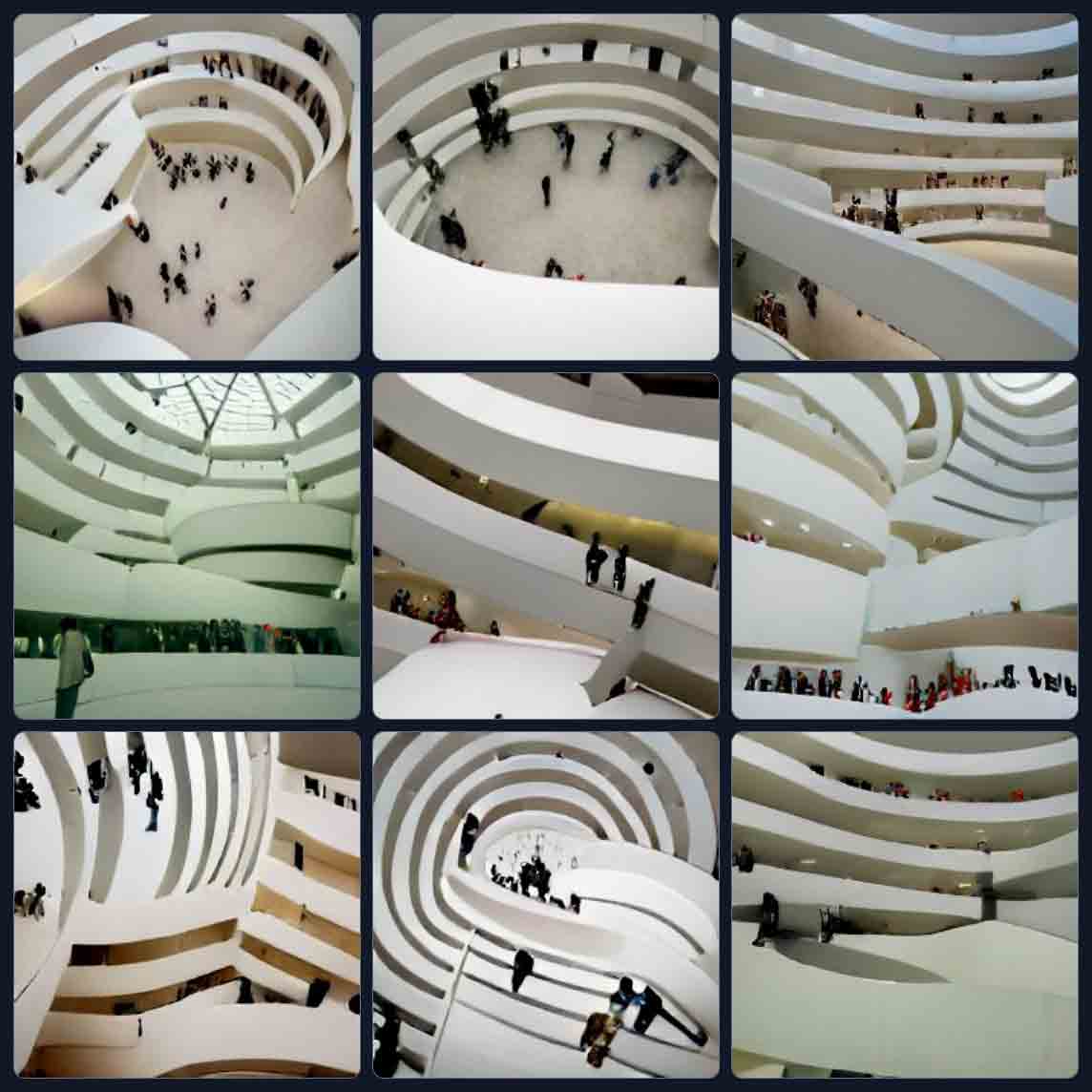

My alt text fed into Craiyon for the photo

My alt text fed into Midjourney for the photo

Google’s alt text fed into Craiyon for the photo

art gallery which includes interior views as well as a large group of people, Solomon R. Guggenheim Museum, The Metropolitan Museum of Art.

I think if Google had not matched the image with the venue, Craiyon would not have come up with such a good set of images, even if they do not match the view of the original image. Bear in mind my original description did not name the venue.

Google’s alt text fed into Midjourney for the photo

art gallery which includes interior views as well as a large group of people, Solomon R. Guggenheim Museum, The Metropolitan Museum of Art.

Even with that extra venue context, if you have never seen the Guggenheim then this would not be a good way to convey it.

Digital Illustration

A digital illustration

This is the alternative text for the image:

A photo illustration travel poster showing a cluster of metallic hot air balloons with spheroid gondolas floating above the opaque clouds of Jupiter’s atmosphere. Behind and above the balloons is a sweeping aurora of teal and purple against a black starry sky. The advertisement reads “Experience the mighty auroras of Jupiter” in metallic block text at the bottom of the poster.

I used these tools to automatically generate the following alternative text:

- Microsoft Office

Background pattern

- Microsoft Edge

Appears to be background pattern, Appears to say EXPERIENCE THE MIGHTY AURORAS OF JUPITER

- Google Chrome

Appears to say: EXPERIENCE THE MIGHTY AURORAS OF JUPITER

- Apple iOS VoiceOver Recognition

A screenshot of the video game with text and the image of a hot air balloon. Experience the mighty auroras of Jupiter.

I chose the most descriptive auto-generated alternative text and fed it into two image generation tools along with my original alternative text. You can compare and contrast the differences:

My alt text fed into Craiyon for the digital illustration

My alt text fed into Midjourney for the digital illustration

Apple’s alt text fed into Craiyon for the digital illustration

A screenshot of the video game with text and the image of a hot air balloon. Experience the mighty auroras of Jupiter.

I figured a video game might provide more than just calling it a pattern.

Apple’s alt text fed into Midjourney for the digital illustration

A screenshot of the video game with text and the image of a hot air balloon. Experience the mighty auroras of Jupiter.

I figured a video game might provide more than just calling it a pattern. Note how some of the balloons look like Jupiter.

Enhanced Photo

A digitally enhanced photograph

This is the alternative text for the image as written by NASA:

an undulating, translucent star-forming region in the Carina Nebula is shown in this Webb image, hued in ambers and blues; foreground stars with diffraction spikes can be seen, as can a speckling of background points of light through the cloudy nebula

I used these tools to automatically generate the following alternative text:

- Microsoft Office

A galaxy with stars

- Microsoft Edge

Appears to be a view of the earth from space

- Google Chrome

Appears to be: nebula, also known as nebula,

(when I arrowed down, then I heard) Nebula: James Webb Space Telescope, NASA

- Apple iOS VoiceOver Recognition

An illustration of stars in the sky.

I chose the most descriptive auto-generated alternative text and fed it into two image generation tools along with my original alternative text. You can compare and contrast the differences:

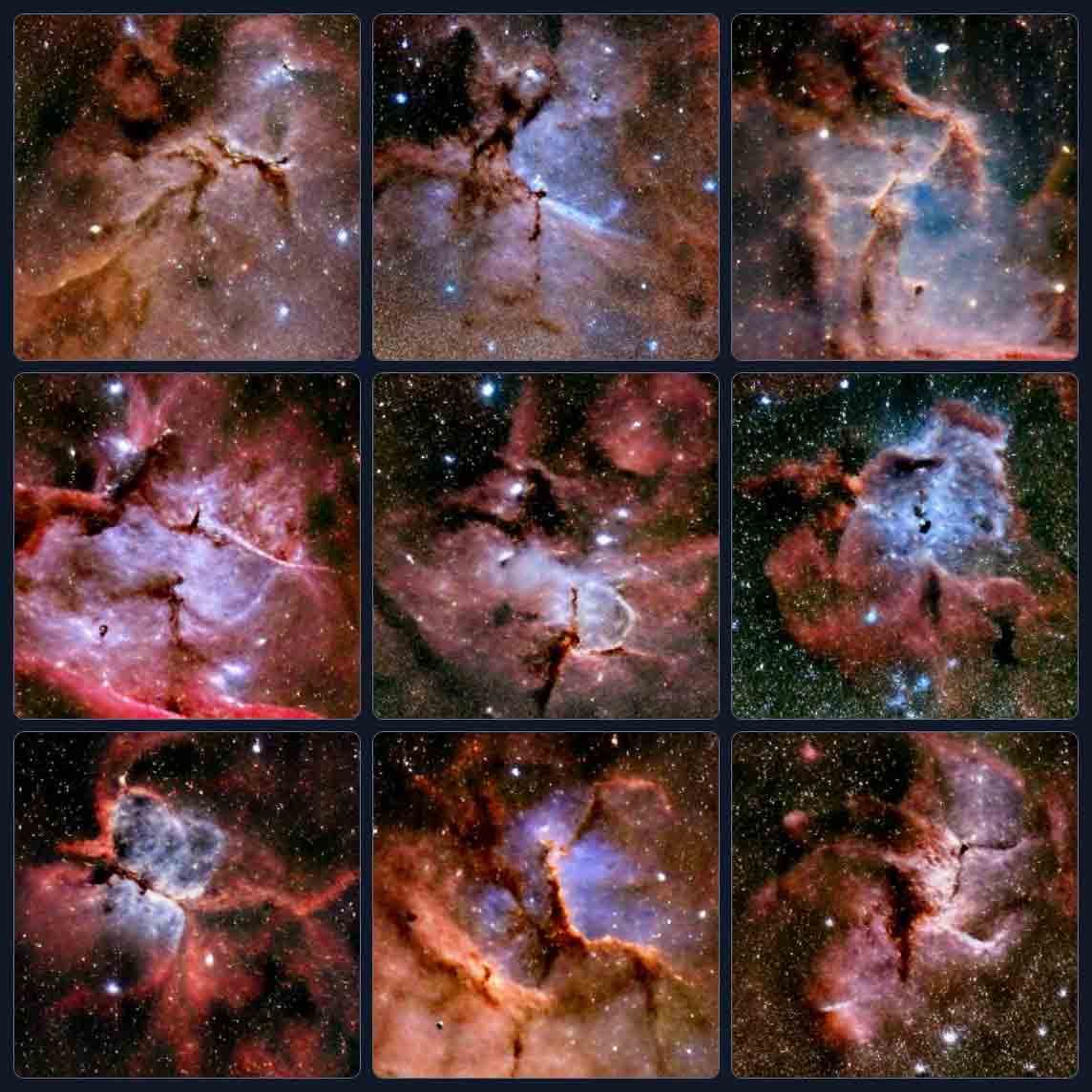

My alt text fed into Craiyon for the enhanced photo

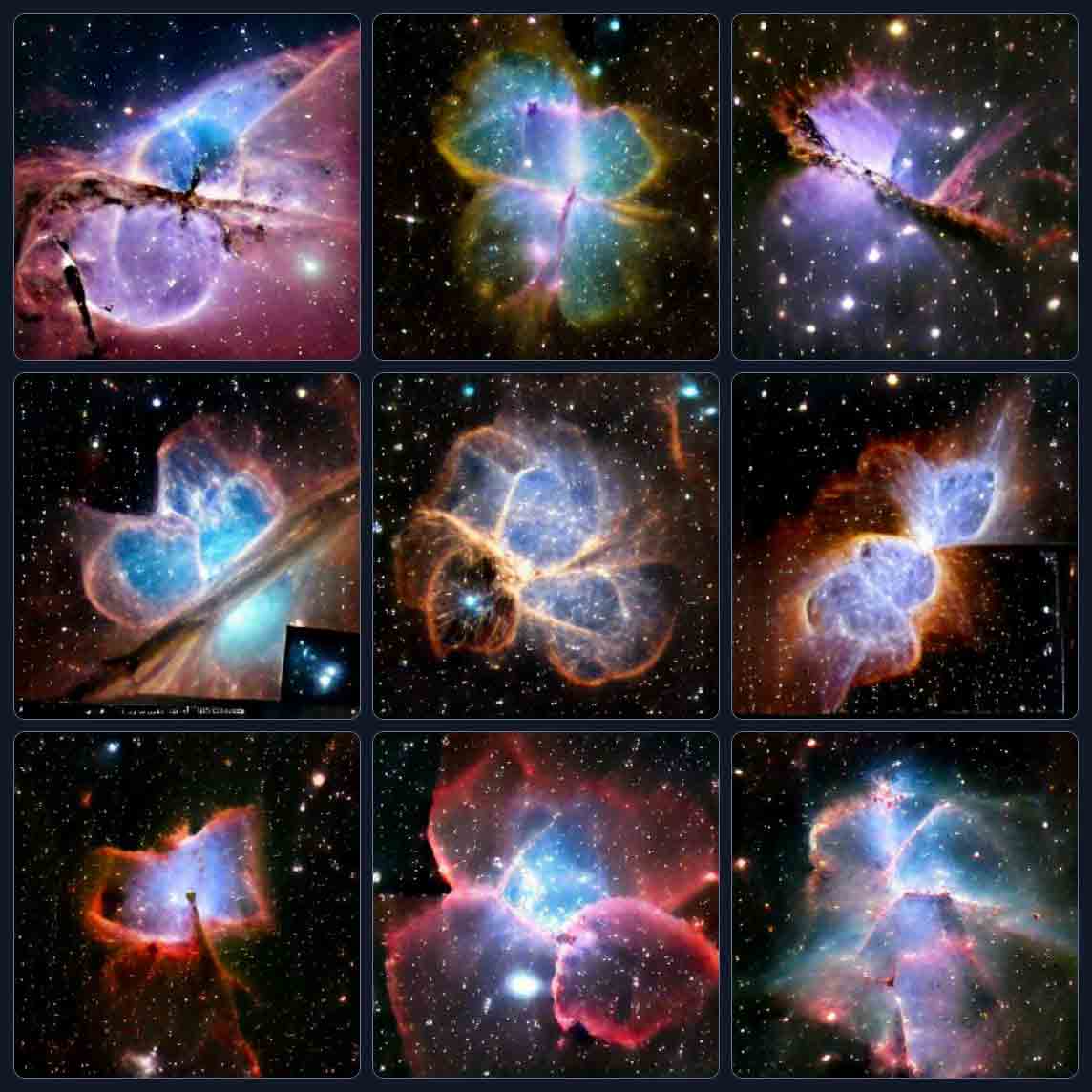

My alt text fed into Midjourney for the enhanced photo

Google’s alt text fed into Craiyon for the enhanced photo

nebula, also known as nebula, Nebula: James Webb Space Telescope, NASA.

That probably again explains why this looks like space, even if not the right space. I think those are planetary nebulae.

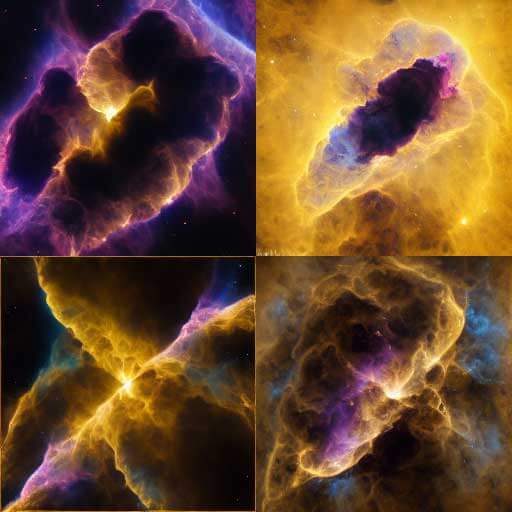

Google’s alt text fed into Midjourney for the enhanced photo

nebula, also known as nebula, Nebula: James Webb Space Telescope, NASA.

I have no idea why it went with purple and yellow.

Painting

A painting

This is the alternative text for the image (used as plain text description in source, and artist provided in original context):

A night sky swirling with vivid blue spirals, a dazzling golden crescent moon, and constellations depicted as radiating spheres dominate the oil-on-canvas artwork. One or two flame-like cypress trees loom over the scene to the side, their black limbs curving and undulating to the motion of the partly obscured sky. A structured settlement lies in the distance in the bottom right of the canvas, among all of this activity. The modest houses and the thin spire of a church, which stands as a beacon against undulating blue hills, are made out of straight, controlled lines.

I used these tools to automatically generate the following alternative text:

- Microsoft Office

A painting of a city

- Microsoft Edge

Appears to be a tree with a city in the background.

- Google Chrome

No description available

- Apple iOS VoiceOver Recognition

A photo of illustrations and a painting.

I chose the most descriptive auto-generated alternative text and fed it into two image generation tools along with my original alternative text. As a bonus for you, dear reader, I ran each twice, giving the context of the artist on the second pass. You can compare and contrast the differences:

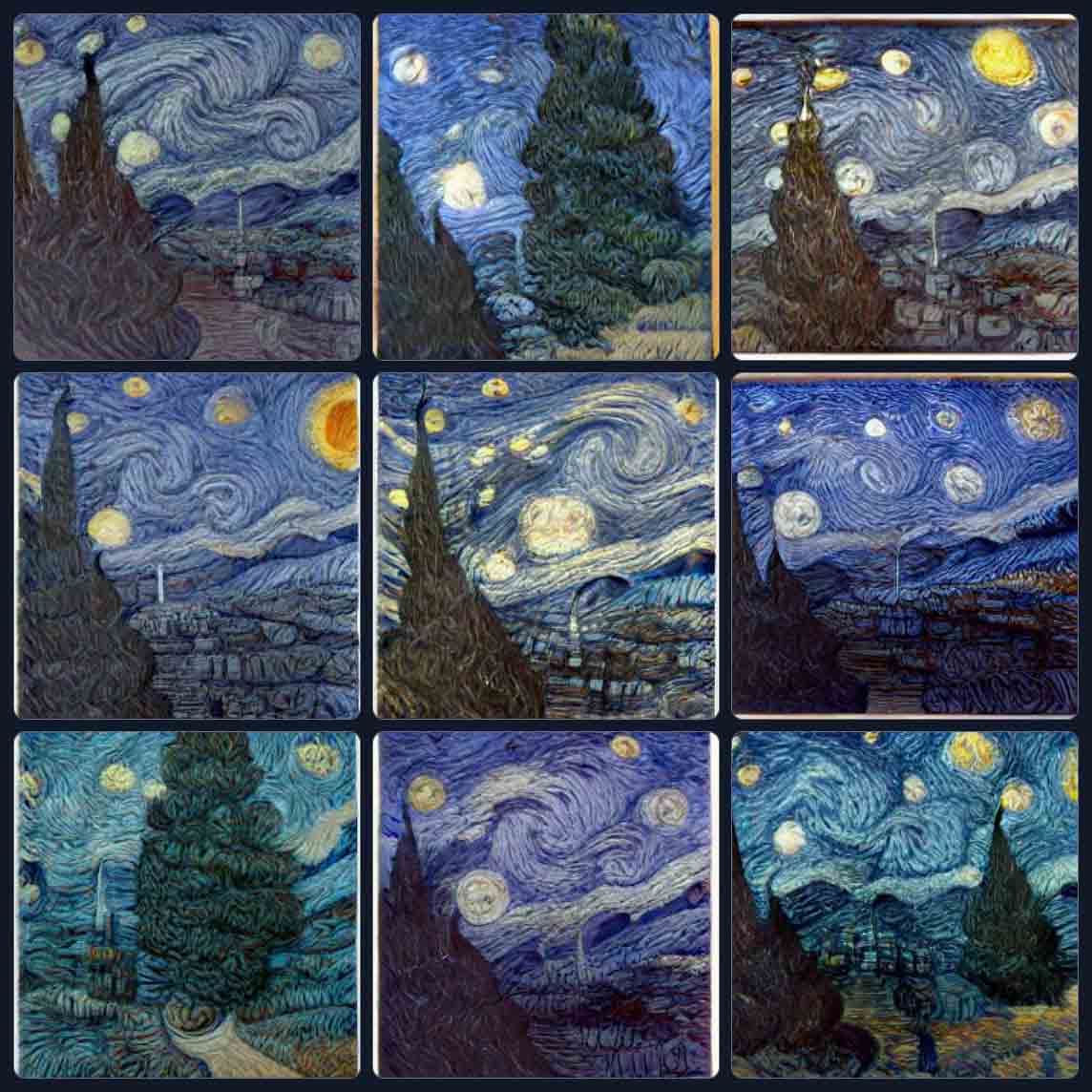

My alt text fed into Craiyon for the painting

A Vincent van Gogh painting of…

Edge’s alt text fed into Craiyon for the painting

a tree with a city in the background.

Nowhere does it mention it is a painting, however. It makes sense that Craiyon would choose to render it as a photo.

a painting of a tree with a city in the background, in the style of Vincent van Gogh.

My alt text fed into Midjourney for the painting

a painting of a tree with a city in the background, in the style of Vincent van Gogh.

Edge’s alt text fed into Midjourney for the painting

a tree with a city in the background.

Nowhere does it mention it is a painting, however.

a painting of a tree with a city in the background, in the style of Vincent van Gogh.

Takeaway

I hear the refrain all the time that AI will solve digital accessibility. I see overlay vendors claim (lie) that their own (non-)AI solution can describe images for users. I talk to devs who assert that browsers can now write the alt text. Social media managers who rely on Facebook to stuff “looks like sandwich” into every photo of a concert flyer. Content writers who press a button and let Microsoft Word report everything as a phone. And so on.

Sighted users have lots of prior visual experience. A history of seeing things and conjuring up mental images that can fill the gaps when graphics fail to load and only the image alt text shows. Blind and low vision users have some of this prior visual experience too.

It turns out AI image generators also have lots of experience. They are fed visuals and, as a result, can make somewhat accurate (if accidental) guesses about what an anemic description might represent. We can see it above from Craiyon with the art gallery.

But look at how far they diverge from the source image when even the best auto-generated description is their seed. Now imagine the worst auto-generated description is in use. Now imagine a user who does not have that deep mental database of imagery. Now imagine that image is integral to understanding the concept of the page or task. Something necessary for a job, or booking a doctor visit, or knowing where their kid is going.

Think of that dopey buck-toothed heart with the glasses that should have been kawaii toast. When we rely on a self-declared AI tool to generate our image descriptions for us, everything might as well be a dopey heart for all the good it does.

Wrap-up

The title of this post is the seed phrase for the opening image.

If you want to see what your favorite alt text generation tool might come up with using the same images I used, I made a CodePen. It might be easier to use the debug mode.

You can automatically generate alternative text from many tools, including:

- Microsoft Office: Add alternative text to a shape, picture, chart, SmartArt graphic, or other object,

- Edge when paired with Narrator: Appears to say: Microsoft Edge now provides auto-generated image labels,

- Chrome with a screen reader (I used JAWS): Get image descriptions on Chrome, and

- iOS through VoiceOver: Use VoiceOver Recognition on your iPhone or iPad.

Don’t.

Thanks to Eric Bailey for getting the VoiceOver image recognition text for me, since my crusty iPad Mini 4th generation is too old to run that feature.

Update: 9 January 2023

Some folks have decided AI-generated image prompts (for feeding into AI image generators) are somehow a better way to provide alternative text. Using the format of this post, I show why that may not be so: AI-Generated Images from AI-Generated Prompts

Update: 13 February 2023

Microsoft is planning to use OpenAI’s ChatGPT to generate abstracts for Bing search results (which prompted Google to push out a panicked response and hemorrhage $100 billion in a day). It is not clear how else Bing will use ChatGPT, but for now the Edge Dev release lets you see how the abstracts might work. I grabbed Edge Dev and checked how the OpenAI-generated abstract sounded for this post.

The abstract in the Bing sidebar:

The document is a critique of the use of AI tools to generate alternative text for images, and shows how they can produce inaccurate or misleading results. The document compares the original alternative text written by the author with the autogenerated alternative text from various sources, and then feeds them into two AI tools to generate images from text prompts.

Comments

Hi I would love to use some of these images as comparisons in our training on alt text. Would that be OK? Thanks

Hannah, based on your email address (and for those reading along who might assume this is a blanket statement), I have no issues with you using it in your training, with the following notes:

- the source images retain their licensing, and assuming I understand Craiyon and Midjourney terms, the generated images are all CC BY-NC 4.0;

- credit and a link back to this post are appreciated.
